IBM Research has developed an analog AI chip for deep learning inference that demonstrates the critical building blocks of a scalable mixed-signal architecture. The energy-efficient chip aims to overcome the limits of traditional digital computing architectures when running deep neural networks (DNNs). Although DNNs have revolutionized AI, on digital systems their performance and energy efficiency are limited by the constant movement of data between separate memory and processing units.

To address this challenge, IBM Research has been exploring analog in-memory computing, also known as analog AI. The approach is inspired by how neural networks operate in biological brains, where the strength of synapses determines how neurons communicate. In analog AI systems, these synaptic weights are stored locally as the conductance values of nanoscale resistive memory devices, such as phase-change memory (PCM). A PCM device can be switched between its amorphous and crystalline phases, and the states between the two let it represent a continuum of values rather than discrete 0s and 1s. Because each weight is stored in the physical atomic configuration of the device, it is non-volatile: it is retained even when the power supply is switched off.
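The idea of computing with stored conductances can be illustrated with a minimal sketch. It assumes each signed weight is split across a pair of conductances (a common encoding, not one the article confirms for this chip) and that input activations are applied as voltages, so that Ohm's law and Kirchhoff's current law produce the weighted sums as column currents. The names `G_MAX`, `weights_to_conductances`, and `crossbar_mvm` are hypothetical, as is the device range.

```python
# Hypothetical maximum device conductance in microsiemens (not from the article).
G_MAX = 25.0


def weights_to_conductances(weights):
    """Map signed weights to (G_plus, G_minus) pairs scaled to the device range."""
    w_max = max(abs(w) for row in weights for w in row) or 1.0
    scale = G_MAX / w_max
    g_plus = [[max(w, 0.0) * scale for w in row] for row in weights]
    g_minus = [[max(-w, 0.0) * scale for w in row] for row in weights]
    return g_plus, g_minus, scale


def crossbar_mvm(g_plus, g_minus, voltages):
    """Column currents I_j = sum_i V_i * (G_plus[i][j] - G_minus[i][j])."""
    n_cols = len(g_plus[0])
    currents = [0.0] * n_cols
    for i, v in enumerate(voltages):
        for j in range(n_cols):
            currents[j] += v * (g_plus[i][j] - g_minus[i][j])
    return currents


weights = [[0.5, -1.0], [0.25, 0.75]]  # rows = inputs, columns = outputs
inputs = [1.0, 2.0]

g_plus, g_minus, scale = weights_to_conductances(weights)
currents = crossbar_mvm(g_plus, g_minus, inputs)
outputs = [c / scale for c in currents]  # undo the conductance scaling
print(outputs)  # [1.0, 0.5], the same as the digital product inputs @ weights
```

In a physical array all of these multiply-accumulate operations happen at once in the analog domain; the nested loop here only reproduces the arithmetic, and it ignores device noise and drift.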

To turn analog AI into reality, two significant challenges must be overcome. First, memory arrays must compute with a level of precision comparable to digital systems, and second, they must seamlessly interface with digital compute units and communication fabric on the analog AI chip.

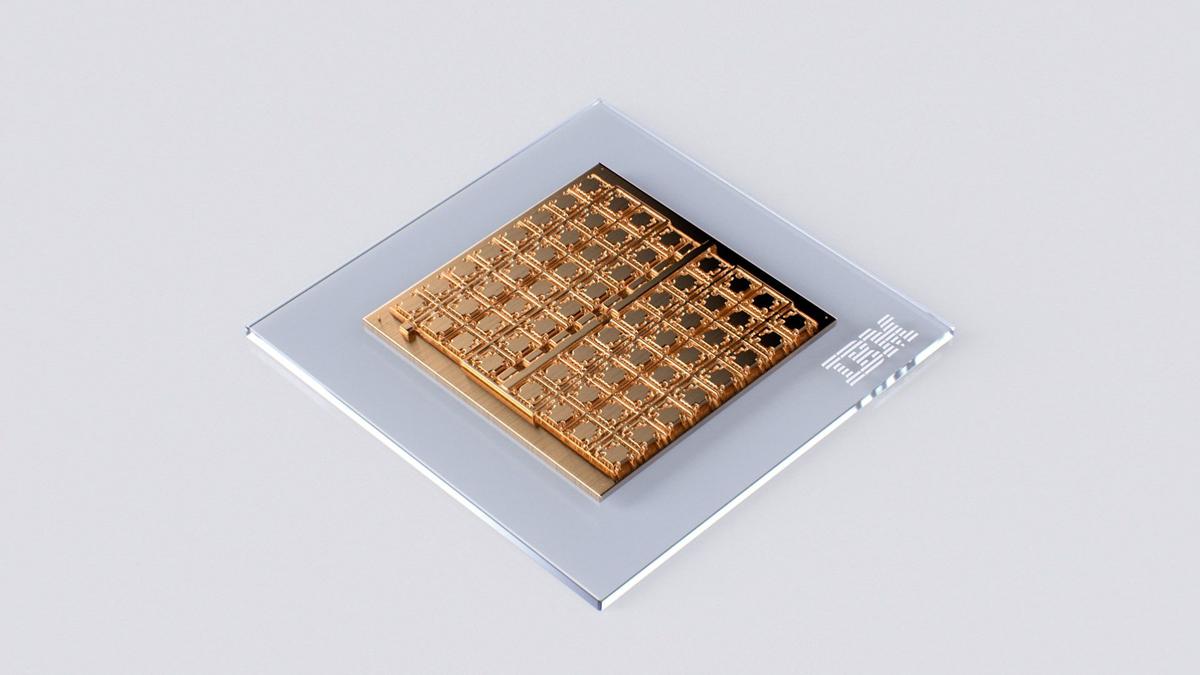

In a recent publication in Nature Electronics, IBM Research introduced a mixed-signal analog AI chip that tackles these challenges. This chip, fabricated at IBM's Albany NanoTech Complex, consists of 64 analog in-memory compute cores, each containing a 256-by-256 crossbar array of synaptic unit cells. Each core integrates compact, time-based analog-to-digital converters for transitioning between analog and digital domains. Digital processing units are also integrated within each core to perform neuronal activation functions and scaling operations.
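The per-core signal path described above (analog column outputs, conversion to digital, then scaling and activation) can be sketched roughly as follows. This is a simplified model under stated assumptions: the time-based converter is approximated by a plain uniform 8-bit quantizer, the activation is taken to be a ReLU, and `full_scale` and `out_scale` are hypothetical calibration parameters that do not come from the article.

```python
def adc_quantize(value, full_scale, bits=8):
    """Clip an analog value to +/- full_scale and return a signed integer code."""
    levels = 2 ** (bits - 1) - 1  # 127 codes per polarity at 8 bits
    clipped = max(-full_scale, min(full_scale, value))
    return round(clipped / full_scale * levels)


def core_forward(column_currents, full_scale, out_scale, bits=8):
    """Digitize each column current, rescale it, and apply a ReLU activation."""
    levels = 2 ** (bits - 1) - 1
    codes = [adc_quantize(i, full_scale, bits) for i in column_currents]
    activations = [max(0.0, c * full_scale / levels * out_scale) for c in codes]
    return codes, activations


# Three example column currents; the last one exceeds full scale and saturates.
codes, activations = core_forward([20.0, -12.5, 60.0], full_scale=50.0, out_scale=0.04)
print(codes)  # [51, -32, 127]
```

The saturating third value shows why the converter range has to be matched to the expected column currents: anything beyond full scale is clipped before the digital units ever see it.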

Each compute core can handle the computations associated with a layer of a DNN model, with the layer's synaptic weights encoded as analog conductance values in the core's PCM devices. A global digital processing unit is also integrated into the chip to handle more complex operations that are critical for certain types of neural networks. Digital communication pathways at the chip interconnects link all the cores and the global processing unit.
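To make the capacity figures concrete, the short sketch below counts the unit cells on the chip and estimates how many 256-by-256 cores a layer would occupy. It assumes one weight per synaptic unit cell and that a layer larger than one crossbar is split into 256-by-256 blocks across several cores; the article states neither explicitly, and the layer shapes are hypothetical.

```python
import math

CORES = 64
ROWS = COLS = 256  # crossbar dimensions per core, as given in the article


def cores_needed(in_features, out_features):
    """Number of 256x256 crossbars needed to hold an in x out weight matrix."""
    return math.ceil(in_features / ROWS) * math.ceil(out_features / COLS)


# Hypothetical layer shapes (inputs, outputs), chosen only for illustration.
layers = [(256, 256), (512, 256), (1024, 1000)]
usage = [cores_needed(i, o) for i, o in layers]

total_cells = CORES * ROWS * COLS
print(total_cells)  # 4194304 unit cells across the chip
print(usage)        # [1, 2, 16]
print(sum(usage) <= CORES)  # True: these three layers would fit on one chip
```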

Using this chip, IBM Research conducted a comprehensive study on analog in-memory computing precision and achieved an accuracy of 92.81% on the CIFAR-10 image dataset. This accuracy is among the highest reported for chips utilizing similar technology. The chip demonstrates high compute efficiency, with more than 15 times higher throughput per area for 8-bit input-output matrix multiplications compared to previous multi-core, in-memory computing chips based on resistive memory.

The analog AI chip combines area- and energy-efficient analog-to-digital converters, highly linear multiply-accumulate compute functionalities, and capable digital compute blocks. This architecture, combined with a massively parallel data-transport design demonstrated in a 34-tile chip presented at the IEEE VLSI symposium in 2021, lays the foundation for a fast and low-power analog AI inference accelerator chip.

IBM Research envisions an accelerator architecture utilizing multiple analog in-memory computing tiles interconnected with specialized digital compute cores on a massively parallel 2D mesh. Alongside hardware-aware training techniques developed in recent years, these accelerators are expected to deliver neural network accuracies equivalent to software implementations across various models in the future.
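The article does not say which hardware-aware training techniques are meant. One widely used approach is noise injection: random perturbations are added to the weights during the training forward pass so that the learned model tolerates the imprecision of analog devices. The toy sketch below applies that idea to a single weight fitting y = 2x; the noise level, learning rate, and data are all hypothetical.

```python
import random

rng = random.Random(0)   # fixed seed so the run is repeatable
SIGMA = 0.05             # assumed weight-noise standard deviation
LR = 0.05                # learning rate

samples = [(0.5, 1.0), (1.0, 2.0), (1.5, 3.0)]  # (x, y) pairs with y = 2x
w = 0.0

for step in range(500):
    x, y = samples[step % len(samples)]
    noisy_w = w + rng.gauss(0.0, SIGMA)  # perturb the weight as hardware might
    pred = noisy_w * x                   # forward pass uses the noisy weight
    grad = 2.0 * (pred - y) * x          # gradient of the squared error
    w -= LR * grad                       # update the clean, stored weight

print(round(w, 1))
```

The stored weight still settles close to 2 even though every forward pass saw a perturbed copy, which is the behavior this kind of training aims for before weights are programmed into analog devices.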

IBM Research's analog AI chip represents a significant advance in deep learning inference, combining high energy efficiency with compute precision comparable to that of digital systems. It paves the way for a fast, low-power analog AI inference accelerator chip.