With YouTube designing its own custom chips, the company joins a growing group of big tech companies seeking to offer something unique in the data center. Operating behind the scenes, Argos made YouTube’s data centers much more efficient.

Roughly seven years ago, Partha Ranganathan realized Moore’s law was dead. That was a pretty big problem for the Google engineering vice president: He had come to expect chip performance to double every 18 months without cost increases and had helped organize purchasing plans for the tens of billions of dollars Google spends on computing infrastructure each year around that idea.

But now Ranganathan was getting a chip twice as good every four years, and it looked like that gap was going to stretch out even further in the not-too-distant future.
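To put that slowdown in numbers, here is a minimal back-of-the-envelope sketch. It assumes steady exponential improvement, and the function names and the six-year planning horizon are illustrative choices rather than anything Google has described.

```python
def annual_growth(doubling_period_years: float) -> float:
    """Yearly performance multiplier implied by a given doubling period."""
    return 2 ** (1 / doubling_period_years)


def gain_over(years: float, doubling_period_years: float) -> float:
    """Total performance multiplier accumulated over a span of years."""
    return 2 ** (years / doubling_period_years)


# Doubling every 18 months (the old expectation) vs. every four years.
old_rate = annual_growth(1.5)   # roughly 1.59x per year
new_rate = annual_growth(4.0)   # roughly 1.19x per year

# Hypothetical six-year planning horizon, chosen only for illustration.
old_gain = gain_over(6, 1.5)    # 16x
new_gain = gain_over(6, 4.0)    # roughly 2.8x

print(f"per year: {old_rate:.2f}x vs {new_rate:.2f}x")
print(f"over six years: {old_gain:.1f}x vs {new_gain:.1f}x")
```

Under those assumptions, a purchasing plan built on the old cadence would overestimate six-year performance gains by more than a factor of five.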

So he and Google decided to do something about it. The company had already committed hundreds of millions of dollars to design its own custom chips for AI, called tensor processing units, or TPUs. Google has now launched more than four generations of the TPU, and the technology has given the company’s AI efforts a leg up over its rivals.

But as Google was developing the TPUs, the company figured out that AI wasn’t the only type of computing it could improve. When Ranganathan and the other engineers took a step back and looked at the most compute-intensive applications in its data centers, it became clear pretty quickly what they should tackle next: video.

“I was coming at it from the point of view of, ‘What is the next big killer application we want to look at?’” Ranganathan said. “And then we looked at the fleet, and we saw that transcoding was consuming a large fraction of our compute cycle.”

YouTube was by far the largest consumer of video-related computing at Google, but the chips it was using to ingest, convert and play back the billions of videos on its platform weren’t especially good at the job. The conversion part is particularly tricky, and doing it efficiently requires powerful chips.

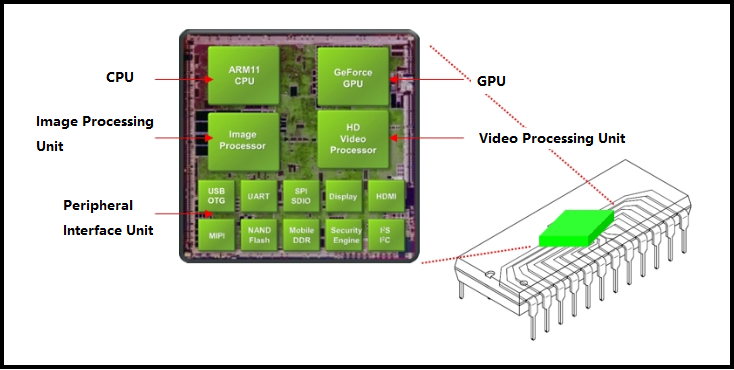

And so converting, or transcoding, videos into the correct format for the thousands of devices that would end up playing them struck Ranganathan as a good problem to spend some time on. Transcoding is very compute-intensive, but at the same time, the task itself is simple enough that it would be possible to design what’s called an application-specific integrated circuit, or ASIC, to get the job done.

“For something like transcoding, which is a very specific, high-intensity sort of workload, they can get an awful lot of bang for their buck there,” chip industry analyst Mike Feibus said.

To get management to greenlight the project in 2016, Ranganathan’s colleague Danner Stodolsky sent an instant message to YouTube vice president Scott Silver, who oversaw the company’s sprawling infrastructure. He asked for about 40 staff members and an undisclosed dollar amount in the millions to make it happen, Silver said.

“It was very, very quick because it just made sense looking at the economics and workload and what we were doing,” Silver said.

YouTube first disclosed the chip, called Argos after the many-eyed monster in Greek mythology, to the public last year in a technical paper that boasted that the new design achieved a 20- to 33-fold increase in transcoding compute performance. Today Google has deployed the second-generation Argos chips to thousands of servers around the world, and has two future iterations in the works.

DIY SOCs

Google’s self-built YouTube chips are part of a growing trend among the tech giants. Amazon has built its Graviton server processors, Microsoft is working on Arm-based server processors, Facebook has a chip design unit — the list goes on.

A common assumption is that big tech companies are getting into chipmaking because it’s an obvious way to save money. Most chip companies operate with a gross margin north of 50%, so by moving the chip design process in-house, tech companies can theoretically save an enormous amount of money.

But that’s not the case, according to Jay Goldberg, principal at D2D Advisory. For one thing, the economics don’t make sense: It’s not worth the massive effort to hire and nurture chip designers to save a few dollars on the margin front. Simply building a prototype of a new advanced chip can cost hundreds of millions of dollars, and perfecting it can then cost tens of millions more.

“Our focus is not really on saving money,” Silver said. “We like saving money, but what we really want to do is deliver an as-good — if not a better — quality experience for viewers.”

The motive is actually pretty simple: The big tech companies are designing their own chips to create a strategic advantage.

“Typically what that means is you have some software that you want to tie to the chip, and you get a big performance gain,” Goldberg said. One of the earliest and best-known examples is Google’s TPU, which it developed to tackle AI tasks in its data centers.

For certain workloads, “the TPU reduces the number of data centers they have to build by 50%,” Goldberg said. “At $1 billion a pop, that’s a lot of savings.” While saving money on data center construction, it also gave Google Cloud something it could offer that Microsoft Azure and AWS didn’t have at the time.

But another part of the motivation behind custom chip designs can be traced to the significant consolidation in the chip industry over the past 20 years. About 20 years ago, there were dozens of companies vying to make chips that the big tech companies wanted, and that fierce competition led to lots of competing designs to choose from.

Today there are only one or two large chipmakers in most categories, especially for data center processors, which has meant the cloud giants can’t get the custom chips they want. Instead, they are left using the general-purpose processors built by companies like Intel and Nvidia, which aren’t bad but are relatively homogeneous.

“What’s really at stake here is controlling the product road map of the semiconductor companies,” Goldberg said. “And so they build their own, they control the road maps and they get the strategic advantage that way.”

Just push ‘play’

YouTube calls the Argos chip a video-coding unit, or VCU. Its main job is to convert the 500 hours of video uploaded to the site every minute into the various screen and compression formats needed to play videos back on the multitude of devices used to watch YouTube, from smartphones to TVs to laptops. Sometimes that means there are as many as 15 variations of each video.
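A rough sense of the scale involved can be worked out from those two figures. The sketch below is an upper bound, not a measurement: it assumes every uploaded video receives the full 15 variations, which the article says happens only sometimes, and the constant and function names are made up for illustration.

```python
UPLOAD_HOURS_PER_MINUTE = 500  # figure cited in the article
MAX_VARIATIONS = 15            # "as many as 15"; treated here as a ceiling


def output_hours_per_minute(upload_hours: int = UPLOAD_HOURS_PER_MINUTE,
                            variations: int = MAX_VARIATIONS) -> int:
    """Hours of transcoded output produced per minute of wall-clock time."""
    return upload_hours * variations


# Hours of source video arriving per day.
uploaded_per_day = UPLOAD_HOURS_PER_MINUTE * 60 * 24

# Ceiling on transcoded output if every video got every variation.
peak_output_per_minute = output_hours_per_minute()
peak_output_per_day = uploaded_per_day * MAX_VARIATIONS

print(f"uploaded per day: {uploaded_per_day:,} hours")
print(f"peak output per minute: {peak_output_per_minute:,} hours")
print(f"peak output per day: {peak_output_per_day:,} hours")
```

Even if the real average number of variations is far lower, the workload is on the order of millions of hours of encoded video a day, which is the kind of narrow, high-volume job an ASIC suits.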

Even though the purpose of the chip was straightforward, and Ranganathan and the team of engineers had a clear idea of what they wanted it to accomplish, it was no small undertaking to invent a piece of silicon. The scale required by YouTube’s operation alone presented enormous challenges that forced the team to think through the design from the silicon itself, all the way through how YouTube would lay out the boards the chips were attached to, the design of the racks in its data centers and how it configured each cluster.

“If an accelerator lands in the fleet and nobody uses it, did it really land?” Ranganathan said. “You can build amazing hardware. But if you don't build it in a way that our software colleagues can use it, and it can actually work — there is compilation and tool, and debugging and deployment and so on.”

To Ranganathan, creating the hardware was only a portion of the task: “It’s the tip of the iceberg,” he said. Digging into how to integrate the Argos chips into the company’s data centers and operate them at YouTube’s scale required close collaboration between the software and hardware engineers.

As a result, Argos is a piece of hardware defined by software, which meant that the engineers working on the chip could use what are called high-level synthesis techniques to iterate on the design much more quickly. Google developed its own version of high-level synthesis software called Taffel that it used to help make the TPUs and the Argos processors.

“This notion of using a software-centric approach to designing hardware was something that we really pushed on very hard in Argos,” Ranganathan said.

Another example of close hardware-software collaboration that Ranganathan cited was how the engineers learned to work around VCUs that fail in the field and a problem called “black holing,” which refers to wasting resources on chips that fail after they are deployed. Essentially, the team came up with a way to detect failure and reroute traffic.
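The article does not describe how Google’s detection and rerouting actually work. The general idea can be illustrated with a minimal sketch, in which a dispatcher counts consecutive failures per worker, quarantines a worker after a threshold and sends the job to the next healthy one. Every name here (`Worker`, `Dispatcher`, `max_failures`, the `vcu-N` labels) is hypothetical, and hardware failure is simulated with a boolean flag.

```python
from dataclasses import dataclass


@dataclass
class Worker:
    name: str
    hardware_ok: bool = True       # simulated hardware state (assumption)
    consecutive_failures: int = 0
    quarantined: bool = False

    def transcode(self, job: str) -> bool:
        """Pretend to transcode; succeeds only if the hardware is healthy."""
        return self.hardware_ok


class Dispatcher:
    def __init__(self, workers, max_failures: int = 3):
        self.workers = list(workers)
        self.max_failures = max_failures
        self._next = 0  # round-robin cursor

    def submit(self, job: str) -> str:
        """Run the job on the next healthy worker and return that worker's name."""
        for _ in range(len(self.workers)):
            worker = self.workers[self._next]
            self._next = (self._next + 1) % len(self.workers)
            if worker.quarantined:
                continue  # stop feeding work to a known-bad chip
            if worker.transcode(job):
                worker.consecutive_failures = 0
                return worker.name
            worker.consecutive_failures += 1
            if worker.consecutive_failures >= self.max_failures:
                worker.quarantined = True
            # Fall through: retry the same job on the next worker.
        raise RuntimeError(f"no healthy worker available for {job}")


workers = [Worker("vcu-0"), Worker("vcu-1", hardware_ok=False), Worker("vcu-2")]
dispatcher = Dispatcher(workers, max_failures=2)
placements = [dispatcher.submit(f"video-{i}") for i in range(6)]
print(placements)
```

In this toy run the failed worker never completes a job and is quarantined after its second failure, so later jobs skip it entirely instead of disappearing into it.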

The first version of the Argos chip simply aimed to take the existing video workload that YouTube was transcoding and complete it more cheaply. Those savings allowed YouTube to begin transcoding many more videos into superior video encoding formats that use considerably less data but offer the same image quality. Smaller files carry enormous benefits: They cost less to store and serve, they allow carriers to use less bandwidth and they deliver faster load times to consumers.

“The thing that we really want to be able to do is take all of the videos that get uploaded to YouTube and transcode them into every format possible and get the best possible experience,” Silver said. “The problem is just intractable. And what this did is it took a major bite out of that apple.”

Like most of the silicon that powers data centers, the Argos chip will go completely unnoticed by the hundreds of millions of people watching YouTube or using Google’s other video products. Silver said that the company hadn’t observed a response to the VCU’s introduction in any of the markets in which YouTube operates around the world.

But that’s not entirely the point. YouTube is decidedly better because it used Google’s custom-built chips to achieve something that would have been completely unthinkable for the earliest companies operating on the internet.

Still, it’s not enough that Google built one generation of VCUs that can compete with the chips made by Nvidia, AMD or Intel. For a custom chip to make any sense at all, Google needs to stay years ahead of the semiconductor giants; otherwise, it would be better off waiting for one of them to build it.

But for YouTube, it makes a lot more sense to design a piece of silicon that’s really good for one purpose and leave the more complex, less-defined problems for the expensive chips that can handle any kind of computing.

“If you think about machine-learning training or inference — these are just like really big, big and interesting workloads that are not served well, or certainly weren’t served well, by CPUs,” Silver said. “You could argue that maybe they’re served well by GPUs … but if most of your computers are transcoding videos and you’re paying whatever, many tens of millions or hundreds of millions of dollars a year to do that, it would become obvious that you have room to [invest in] an ASIC to do that.”

By Max A. Cherney, source https://www.protocol.com/enterprise/youtube-custom-chips-argos-asics